You may have found Profile Attributes to be a useful addition in Siebel 7, as a better way to use global variables. The most common use of profile attributes is exactly that. To set one, use a script expression such as:

```
TheApplication().SetProfileAttr("CurrentLogLevel", 5);
```

This variable can then be referenced elsewhere in script:

```
var iLogLevel = TheApplication().GetProfileAttr("CurrentLogLevel");
```

Alternatively, you can get this value in a calculated field using just:

```
GetProfileAttr("CurrentLogLevel")
```
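Profile attributes behave like a per-session map of named values. The sketch below uses a hypothetical in-memory stand-in for `TheApplication()` written in plain JavaScript; `makeMockApplication` and the attribute name `NotSetYet` are invented for illustration, and this is not Siebel code. It assumes that values are stored and returned as strings and that an unset attribute reads back as an empty string, which is consistent with the `!= ""` check used later in this post.

```javascript
// Hypothetical in-memory stand-in for TheApplication(); NOT the Siebel API.
// Assumption: values are stored as strings, and an unset attribute reads back as "".
function makeMockApplication() {
  const attrs = new Map();
  return {
    SetProfileAttr(name, value) {
      attrs.set(name, String(value)); // the number 5 is stored as the string "5"
    },
    GetProfileAttr(name) {
      return attrs.has(name) ? attrs.get(name) : "";
    },
  };
}

const app = makeMockApplication();
app.SetProfileAttr("CurrentLogLevel", 5);

const logLevel = app.GetProfileAttr("CurrentLogLevel");
console.log(typeof logLevel, logLevel);              // string 5
console.log(app.GetProfileAttr("NotSetYet") === ""); // true

// Comparing the string with a number converts it to a number first...
console.log(logLevel > 4);                           // true
// ...but comparing two strings is lexicographic.
console.log("10" > "4");                             // false
```

The last two lines are the reason a check like `> 4` against a number still works on a returned value, while comparing two profile attribute strings directly would not.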

OK, so that is pretty elementary, as it is documented well in Bookshelf. This approach assumes a relatively dynamic global variable: one whose value is determined programmatically during a user session and is lost when the session ends. But what if you want to reference a user attribute that persists? There are already a few specialized functions that return some user attributes:

- PositionName()
- LoginName()
- LoginId()
- PositionId()

But what if you want to add your own? Here is my requirement: I want to store a custom logging level attribute that can be set on a user-by-user basis. I will go into more detail in future posts about what I might want to do with this value. The first step is either to find an existing place to store this value or to extend a table to make one. I prefer the latter, so I am going to add a new number column, X_LOG_LEVEL, to the S_USER table.

This field should then be exposed in the Employee BC (it could alternatively be exposed in the User BC if an eService or eSales application were in play, but I am going to keep this simple for now). Create a new single value field (SVF) to expose this column; I added some validation too.

Now, in order to set this value for each user, I need to expose it in the GUI. So let's put it in the Employee List applet, where it will be visible in the Administration - User -> Employees view.

Once the list column exists, edit the web layout and add this control to the list in Edit List mode. Next, I am going to expose this field in a very special BC, Personalization Profile. This BC is instantiated when the user logs in, and its fields represent essentially all the potential attributes of the logged-in user, including the User, Employee, Contact, Position, Division, and Organization. As I will show in a minute, its fields can be referenced as profile attributes. There is already a join in this BC to the S_USER table, so just create a new SVF to expose this column.

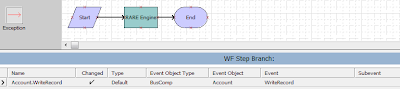

Finally, let's add some script to the Application_Start event of the application you are using. The script sets the global-variable-style profile attribute described at the beginning of this post, CurrentLogLevel, to the value on the User record (read from the new Personalization Profile field, User Log Level) if one was set, and to a constant default otherwise. Then, if the logging level is sufficiently high, it starts application tracing and writes a line to the new trace file:

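```
function Application_Start (CommandLine)
{
    // Default level used when the user record has no log level set
    var iDefaultLogLevel = 3;
    // "User Log Level" is the new field on the Personalization Profile BC;
    // GetProfileAttr returns "" when the field has no value
    var sUserLogLevel = TheApplication().GetProfileAttr("User Log Level");

    if (sUserLogLevel != "")
        TheApplication().SetProfileAttr("CurrentLogLevel", sUserLogLevel);
    else
        TheApplication().SetProfileAttr("CurrentLogLevel", iDefaultLogLevel);

    // Trace only at a sufficiently high level; the file name includes the login name
    if (TheApplication().GetProfileAttr("CurrentLogLevel") > 4) {
        TheApplication().TraceOn("Trace-" + TheApplication().LoginName() + ".txt", "Allocation", "All");
        TheApplication().Trace("Application Started");
    }
}
```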
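To check the defaulting rule without a Siebel client, the decision logic of the Application_Start script can be pulled out into plain JavaScript functions. This is a hypothetical refactoring for illustration only: `resolveLogLevel`, `shouldTrace`, and `traceFileName` are names introduced here, not Siebel functions, and the default of 3 and threshold of 4 are simply the values used in this post. It assumes the user's level arrives as a string, empty when unset.

```javascript
// Hypothetical helpers mirroring the Application_Start logic; plain JavaScript,
// not Siebel API calls.

const DEFAULT_LOG_LEVEL = 3; // used when the user has no level set
const TRACE_THRESHOLD = 4;   // tracing starts only above this level

// userLevel is what GetProfileAttr("User Log Level") would return:
// assumed to be a string, and "" when the Employee record has no value.
function resolveLogLevel(userLevel) {
  return userLevel !== "" ? Number(userLevel) : DEFAULT_LOG_LEVEL;
}

function shouldTrace(level) {
  return level > TRACE_THRESHOLD;
}

// Mirrors "Trace-" + LoginName() + ".txt" from the script.
function traceFileName(loginName) {
  return "Trace-" + loginName + ".txt";
}

// The two scenarios walked through in the post: no level set, then 5.
for (const userLevel of ["", "5"]) {
  const level = resolveLogLevel(userLevel);
  console.log(JSON.stringify(userLevel), level, shouldTrace(level));
}
// "" 3 false
// "5" 5 true
console.log(traceFileName("SADMIN")); // Trace-SADMIN.txt
```

Keeping the decision in small pure functions like these makes the threshold and default easy to reason about separately from the Siebel calls that read and write the attributes.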

OK. Now compile everything. The base case is with no user log level set. When you open the application, the Application_Start event fires, and since I have not set a user log level yet, CurrentLogLevel defaults to 3 and no trace file is created. You'll have to trust me so far. Next, navigate to Administration - User -> Employees and query for your login. Right-click to show the columns, and move User Log Level to the Selected Columns list. Now set this field's value to 5.

Log out and log back in. You will now find a file in your \BIN directory called Trace-SADMIN.txt (I obviously logged in as SADMIN, but the file name is dynamic as well) containing the line:

```
Application Started
```

Done. I have used this for a logging attribute that I will talk about more in future posts, but you can use this feature to store any type of data on a table linked to the logged-in user. This is sometimes useful for referencing functional information related to certain business processes. For instance, you could use an attribute from this BC in a calculated field on a different BC to determine whether a record on that BC should be read-only. Good luck.